Go 调度模型(一)

想清楚了就去做,做的时候不要再回头想。

OS Scheduler

在操作系统中保存了运行的进程列表,以及进程的运行状态(运行中、可运行及不可运行)。当进程运行时长超过了被分配的时间片(比如每10ms),那么该进程会被系统抢占,然后在该CPU上执行别的进程。所以,OS的调度是抢占式的,可能抢占策略略有不同。

当进程被抢占时,需要保存该进程运行的上下文,并被重新放回到调度器,等待下一次被执行。

Golang Scheduler

Goroutine scheduler

The scheduler’s job is to distribute ready-to-run goroutines over worker threads.

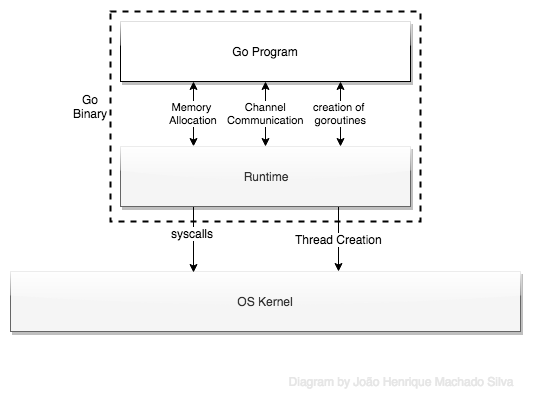

如图所示,OS层看到是只有Go进程以及运行的多个线程,而Goroutine本身是被Golang Runtime Scheduler调度管理的。

对OS而言,Go Binary是一个系统进程。内部Go Program对系统API的调度都是通过Runtime level解释来实现。Runtine记录了每个Goroutine的信息,在当前进程的线程池中按照顺序依次调度Goroutine。

Golang在Runtime内部实现了自己的调度,并不是基于时间切片的抢占式调度,而是基于Goroutines的协作式调度,目的就是要让Goroutine在OS-Thread中发挥出更多的并发优势。所以,在Runtime过程中,只有当正在运行的Goroutine被阻塞或者运行结束时,别的Goroutine才会被调度。常见的阻塞情形包括:

- 阻塞的系统调用方式,比如文件或网络操作

- 垃圾自动回收

整体而言,Goroutine的数量大于Threads数量会更有优势,这样当其他Goroutine阻塞时,别的Goroutine就会被执行。

Goroutine

G用于表示Goroutine及它所包含的栈和状态信息。Goroutine存在于Go Runtime的的虚拟空间,而非OS中。

// src/runtime/runtime2.go

// 以下结构体精简了很多字段

type g struct {

stack stack // offset known to runtime/cgo

m *m // current m; offset known to arm liblink

sched gobuf

stktopsp uintptr // expected sp at top of stack, to check in traceback

param unsafe.Pointer // passed parameter on wakeup

atomicstatus uint32

stackLock uint32 // sigprof/scang lock; TODO: fold in to atomicstatus

}

代码中,通过追加go前缀遍可以创建groutine。

go func(){

}

Machine

物理执行的单元,用于表示OS Threads。M包含当前运行的Goroutine信息等。

// src/runtime/runtime2.go

type m struct {

g0 *g // goroutine with scheduling stack

// Fields not known to debuggers.

goSigStack gsignalStack // Go-allocated signal handling stack

curg *g // current running goroutine

p puintptr // attached p for executing go code (nil if not executing go code)

spinning bool // m is out of work and is actively looking for work

blocked bool // m is blocked on a note

freeWait uint32 // if == 0, safe to free g0 and delete m (atomic)

alllink *m // on allm

schedlink muintptr

createstack [32]uintptr // stack that created this thread.

thread uintptr // thread handle

freelink *m // on sched.freem

}

Processor

P记录了G和M的相关信息,P需要调度M来让M执行G的代码。在P中包含了本地可运行的Goroutine队列,这样的设计也是为了优化访问全局Goroutines队列频繁加锁的性能问题。当一个新的G被创建,它会被追加在相应P队列的末尾,以保证最终会被执行。

此外,当P没有可运行的Goroutine处理时,它会随机从其他P的Goroutines队列末尾取一半G用于自己消费。

// src/runtime/runtime2.go

type p struct {

lock mutex

id int32

m muintptr // back-link to associated m (nil if idle)

mcache *mcache

// Queue of runnable goroutines. Accessed without lock.

runqhead uint32

runqtail uint32

runq [256]guintptr

// runnext, if non-nil, is a runnable G that was ready'd by

// the current G and should be run next instead of what's in

// runq if there's time remaining in the running G's time

// slice. It will inherit the time left in the current time

// slice. If a set of goroutines is locked in a

// communicate-and-wait pattern, this schedules that set as a

// unit and eliminates the (potentially large) scheduling

// latency that otherwise arises from adding the ready'd

// goroutines to the end of the run queue.

runnext guintptr

// Available G's (status == Gdead)

gfree *g

}

Code snippet

尝试执行下面的代码,会发现这其实是一个死循环,最后的打印结果永远得不到输出。

package main

import "fmt"

import "time"

import "runtime"

func main() {

var result int

processors := runtime.GOMAXPROCS(0)

for i := 0; i < processors; i++ {

go func() {

for { result++ }

}()

}

time.Sleep(time.Second) //wait for go function to increment the value.

fmt.Println("result =", result)

}

在Golang运行时,创建的OS Threads最多等于GOMAXPROCS,Goroutine就在这有限的OS Threads上被调度执行。

在代码中,当前并行运行的Goroutine全部用来做无限循环的累加操作,运行数量等于GOMAXPROCS。而main是一个额外的Goroutine。根据Golang Scheduler的设定,因为其他Goroutine都在紧张的运行,调度器并不会将其中的任何一个Goroutine挂起,所以main goroutine永远不会被调度执行。

在实际开发中,因为存在诸如channel或者Api requeest等情况,程序hang住的可能行并不大。

参考文章: